Lab 2: Building a Travel Planner with a Simple LangGraph

Overview

This lab guides you through the process of creating a simple Travel Planner using LangGraph, a library for building stateful, multi-step applications with language models. The Travel Planner demonstrates how to structure a conversational AI application that collects user input and generates personalized travel itineraries.

What gets covered in this lab:

- LangGraph constructs for building agentic systems as a graph

- An introduction to short-term and long-term memory for turn-by-turn conversations

Intro to Agents

Agents are intelligent systems or components that utilize Large Language Models (LLMs) to perform tasks in a dynamic and autonomous manner. Here's a breakdown of the key concepts:

What Are Agents?

- Step-by-Step Thinking: Agents leverage LLMs to think and reason through problems in a structured way, often referred to as chain-of-thought reasoning. This allows them to plan, evaluate, and execute tasks effectively.

- Access to Tools: Agents can utilize external tools (e.g., calculators, databases, APIs) to enhance their decision-making and problem-solving capabilities.

- Access to Memory: Agents can store and retrieve context, enabling them to work on tasks over time, adapt to user interactions, and handle complex workflows.

Key characteristics of AI agents include:

- Perception: The ability to gather information from their environment through sensors or data inputs.

- Decision-making: Using AI algorithms to process information and determine the best course of action.

- Action: The capability to execute decisions and interact with the environment or users.

- Learning: The ability to improve performance over time through experience and feedback.

- Autonomy: Operating independently to some degree, without constant human intervention.

- Goal-oriented: Working towards specific objectives or tasks.

Use Case Details

Our Travel Planner follows a straightforward, three-step process:

- Initial User Input:

  - The application prompts the user to describe the travel plan they want help with from the AI agent.

  - This information is stored in the state.

- Interests Input:

  - The user is asked to provide their interests for the trip.

  - These interests are stored as a list in the state.

- Itinerary Creation:

  - Using the collected city and interests, the application leverages a language model to generate a personalized day trip itinerary.

  - The generated itinerary is presented to the user.

The flow between these steps is managed by LangGraph, which handles the state transitions and ensures that each step is executed in the correct order.
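The three steps above can be pictured as plain functions that pass a state dictionary along. The following is only a minimal sketch in standard-library Python, not LangGraph itself: the function names, the `interests` key, and the keyword matching are illustrative assumptions, and the last step stands in for the language model call.

```python
from typing import Callable, Dict, List

State = Dict[str, object]


def collect_request(state: State) -> State:
    # Step 1: keep the raw travel request in the state.
    return {**state, "user_message": state.get("user_message", "")}


def collect_interests(state: State) -> State:
    # Step 2: derive a list of interests (here: a naive keyword match).
    known = ["boating", "swimming", "hiking"]
    text = str(state["user_message"]).lower()
    return {**state, "interests": [k for k in known if k in text]}


def build_itinerary(state: State) -> State:
    # Step 3: placeholder for the LLM call that writes the itinerary.
    plan = f"Day trip to {state['city']}: " + ", ".join(state["interests"])
    return {**state, "itinerary": plan}


def run_steps(state: State, steps: List[Callable[[State], State]]) -> State:
    # Execute the steps in order, feeding each one the previous state.
    for step in steps:
        state = step(state)
    return state


result = run_steps(
    {"city": "Seattle", "user_message": "I like boating and swimming"},
    [collect_request, collect_interests, build_itinerary],
)
print(result["itinerary"])
```

LangGraph takes over the role of `run_steps` here, and adds state persistence and routing on top.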

Setup and Imports

First, let's import the necessary modules and set up our environment.

# %pip install -U --no-cache-dir \

# "langchain==0.3.7" \

# "langchain-aws==0.2.6" \

# "langchain-community==0.3.5" \

# "langchain-text-splitters==0.3.2" \

# "langchainhub==0.1.20" \

# "langgraph==0.2.45" \

# "langgraph-checkpoint==2.0.2" \

# "langgraph-sdk==0.1.35" \

# "langsmith==0.1.140" \

# "pypdf>=3.8,<4" \

# "ipywidgets>=7,<8" \

# "matplotlib==3.9.0" \

# "faiss-cpu==1.8.0"

# %pip install -U --no-cache-dir transformers

# %pip install -U --no-cache-dir boto3

# %pip install grandalf==3.1.2

import os

from typing import TypedDict, Annotated, List

from langgraph.graph import StateGraph, END

from langchain_core.messages import HumanMessage, AIMessage

from langchain_core.prompts import ChatPromptTemplate

from langchain_core.runnables.graph import MermaidDrawMethod

from IPython.display import display, Image

from dotenv import load_dotenv


#load_dotenv()

LangGraph Basics

Key Components

- StateGraph

  - This object encapsulates the graph being traversed during execution.

  - It is the core of our application and defines the flow of our Travel Planner.

- PlannerState: a custom type representing the state of our planning process.

- Nodes

  - In LangGraph, nodes are typically Python functions.

  - Agent graphs commonly use two main kinds of node:

    - The agent node: responsible for deciding what (if any) actions to take.

    - The tool node: orchestrates calling the respective tool and returning the output.

  - The graph in this lab uses two simpler nodes, input_interests and create_itinerary.

- Edges

  - Define how the logic is routed and how the graph decides to stop.

  - Define how your agents work and how different nodes communicate with each other.

  - There are a few key types of edges:

    - Normal Edges: Go directly from one node to the next.

    - Conditional Edges: Call a function to determine which node(s) to go to next.

    - Entry Point: Which node to call first when user input arrives.

    - Conditional Entry Point: Call a function to determine which node(s) to call first when user input arrives.

- LLM Integration: Utilizing a language model to generate the final itinerary.

- Memory Integration: Utilizing long-term and short-term memory for conversations.
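To make the difference between normal and conditional edges concrete, here is a small standard-library sketch of a graph runner. It is not the LangGraph API: `NODES`, `EDGES`, `run_graph`, the `END` sentinel, and the node names are all hypothetical, chosen only to mirror the concepts listed above.

```python
from typing import Callable, Dict, Union

State = Dict[str, list]
END = "__end__"  # hypothetical sentinel standing in for a terminal node


def ask_interests(state: State) -> State:
    return {**state, "visited": state["visited"] + ["ask_interests"]}


def plan(state: State) -> State:
    return {**state, "visited": state["visited"] + ["plan"]}


def apologize(state: State) -> State:
    return {**state, "visited": state["visited"] + ["apologize"]}


def route_after_interests(state: State) -> str:
    # Conditional edge: inspect the state and name the next node.
    return "plan" if state["interests"] else "apologize"


NODES: Dict[str, Callable[[State], State]] = {
    "ask_interests": ask_interests,
    "plan": plan,
    "apologize": apologize,
}

EDGES: Dict[str, Union[str, Callable[[State], str]]] = {
    "ask_interests": route_after_interests,  # conditional edge
    "plan": END,                             # normal edge
    "apologize": END,                        # normal edge
}


def run_graph(entry_point: str, state: State) -> State:
    # Start at the entry point and follow edges until the END sentinel.
    current = entry_point
    while current != END:
        state = NODES[current](state)
        edge = EDGES[current]
        current = edge(state) if callable(edge) else edge
    return state


with_interests = run_graph("ask_interests", {"interests": ["boating"], "visited": []})
without_interests = run_graph("ask_interests", {"interests": [], "visited": []})
print(with_interests["visited"])
print(without_interests["visited"])
```

The graph built later in this lab only needs normal edges, since its two nodes always run in the same order.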

Define Agent State

We'll define the state that our agent will maintain throughout its operation. First, define the State of the graph. The State schema serves as the input schema for all Nodes and Edges in the graph.

class PlannerState(TypedDict):
    messages: Annotated[List[HumanMessage | AIMessage], "The messages in the conversation"]
    itinerary: str
    city: str
    user_message: str
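As a rough illustration of how such a state evolves, the sketch below merges updates into a typed dictionary: keys present in an update overwrite the old values, and all other keys are kept. This mirrors, under that simplifying assumption, what happens when a node returns new values for state keys that have no custom reducer. `SketchState` and `apply_update` are hypothetical names used only for this example.

```python
from typing import List, TypedDict


class SketchState(TypedDict, total=False):
    # total=False lets a state (or an update) carry only some of the keys.
    messages: List[str]
    itinerary: str
    city: str
    user_message: str


def apply_update(state: SketchState, update: SketchState) -> SketchState:
    # Keys in the update replace the old values; untouched keys survive.
    merged: SketchState = {**state, **update}
    return merged


sketch_state: SketchState = {"city": "Seattle", "user_message": "boating please"}
sketch_state = apply_update(sketch_state, {"itinerary": "09:00 boat tour"})
sketch_state = apply_update(sketch_state, {"user_message": "add swimming"})
print(sketch_state)
```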

Set Up Language Model and Prompts

from langchain_aws import ChatBedrockConverse

from langchain_aws import ChatBedrock

import boto3

from langgraph.checkpoint.memory import MemorySaver

from langchain_core.runnables.config import RunnableConfig

from langchain_core.chat_history import InMemoryChatMessageHistory

from langchain_core.output_parsers import StrOutputParser

from langchain_core.prompts import ChatPromptTemplate

from langchain_core.runnables.history import RunnableWithMessageHistory

from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder

# ---- ⚠️ Update region for your AWS setup ⚠️ ----

bedrock_client = boto3.client("bedrock-runtime", region_name="us-west-2")

model_id = "anthropic.claude-3-haiku-20240307-v1:0"

provider_id = "anthropic"

llm = ChatBedrockConverse(
    model=model_id,
    provider=provider_id,
    temperature=0,
    max_tokens=None,
    client=bedrock_client,
)

itinerary_prompt = ChatPromptTemplate.from_messages([
    ("system", """You are a helpful travel assistant. Create a day trip itinerary for {city} based on the user's interests.
Follow these instructions:
1. Use the below chat conversation and the latest input from Human to get the user interests.
2. Always account for travel time and meal times - if it's not possible to do everything, then say so.
3. If the user hasn't stated a time of year or season, assume summer season in {city} and state this assumption in your response.
4. If the user hasn't stated a travel budget, assume a reasonable dollar amount and state this assumption in your response.
5. Provide a brief, bulleted itinerary in chronological order with specific hours of day."""),
    MessagesPlaceholder("chat_history"),
    ("human", "{user_message}"),
])

Define the nodes and Edges

We add the nodes, the edges, and a persistent memory to the StateGraph before we compile it. The graph will:

- take the user's travel plans

- invoke the model with Bedrock

- generate the travel plan for the day

- allow the plan to be extended or modified

def input_interests(state: PlannerState) -> PlannerState:
    # The request arrives in the initial state instead of via input().
    # Make sure the message history exists on the first turn of a thread.
    return {
        **state,
        "messages": state.get("messages") or [],
    }

def create_itinerary(state: PlannerState) -> PlannerState:
    # Fill the prompt with the city, the stored conversation, and the latest request.
    response = llm.invoke(
        itinerary_prompt.format_messages(
            city=state['city'],
            user_message=state['user_message'],
            chat_history=state['messages'],
        )
    )
    print("\nFinal Itinerary:")
    print(response.content)
    # Append this turn so follow-up requests on the same thread can see it.
    return {
        **state,
        "messages": state['messages'] + [HumanMessage(content=state['user_message']), AIMessage(content=response.content)],
        "itinerary": response.content,
    }

Create and Compile the Graph

Now we'll create our LangGraph workflow and compile it.

- First, we initialize a StateGraph with the PlannerState class we defined above.

- Then, we add our nodes and edges.

- We use the START node, a special node that sends user input to the graph, to indicate where to start our graph. In the code below, set_entry_point("input_interests") plays this role.

- The END node is a special node that represents a terminal node.

workflow = StateGraph(PlannerState)

#workflow.add_node("input_city", input_city)

workflow.add_node("input_interests", input_interests)

workflow.add_node("create_itinerary", create_itinerary)

workflow.set_entry_point("input_interests")

#workflow.add_edge("input_city", "input_interests")

workflow.add_edge("input_interests", "create_itinerary")

workflow.add_edge("create_itinerary", END)

# The checkpointer lets the graph persist its state

# this is a complete memory for the entire graph.

memory = MemorySaver()

app = workflow.compile(checkpointer=memory)

Display the graph structure

Compiling the graph performs a few basic checks on the graph structure. We can now visualize the compiled graph as a Mermaid diagram.

display(
    Image(
        app.get_graph().draw_mermaid_png(
            draw_method=MermaidDrawMethod.API,
        )
    )
)

Define the function that runs the graph

When we compile the graph, we turn it into a LangChain Runnable, which automatically enables calling .invoke(), .stream() and .batch() with your inputs. In the following example, we call stream() to run the graph with our inputs.

def run_travel_planner(user_request: str, config_dict: dict):
    print(f"Current User Request: {user_request}\n")
    init_input = {"user_message": user_request, "city": "Seattle"}
    for output in app.stream(init_input, config=config_dict, stream_mode="values"):
        pass  # The nodes themselves handle all printing
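The loop above discards each streamed output because the nodes print for themselves. To picture what streaming whole state values looks like, here is a standard-library sketch under the assumption that the stream yields the full state once at the start and again after every step. `stream_values` and the two step functions are hypothetical and are not LangGraph calls.

```python
from typing import Callable, Dict, Iterator, List

State = Dict[str, str]


def stream_values(state: State, steps: List[Callable[[State], State]]) -> Iterator[State]:
    # Yield a snapshot of the initial state, then one after each step.
    yield dict(state)
    for step in steps:
        state = step(state)
        yield dict(state)


def add_greeting(state: State) -> State:
    return {**state, "greeting": f"Planning {state['city']}"}


def add_plan(state: State) -> State:
    return {**state, "itinerary": "10:00 ferry ride"}


snapshots = list(stream_values({"city": "Seattle"}, [add_greeting, add_plan]))
for snapshot in snapshots:
    print(sorted(snapshot))

final_state = snapshots[-1]
```

A caller that wants only the end result can keep the last snapshot, as `final_state` does here.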

Travel Planner Example

- To run this, supply a user request that describes the activities you are interested in.

- We have initialized the graph state with the city Seattle. This will usually be dynamic, as we will see in subsequent labs.

- You can enter interests such as boating or swimming.

config = {"configurable": {"thread_id": "1"}}

user_request = "Can you create a itinerary for a day trip in Seattle with boating and swimming options. Need a complete plan"

run_travel_planner(user_request, config)

Leverage the memory saver to manipulate the Graph State

- Since the conversation messages are part of the graph state, we can leverage them.

- However, the graph state is tied to a session: the session_id is passed in as a thread_id.

- If we add a request with a different thread ID, it will create a new session that does not have the previous interests.

- However, this state also holds the other checkpoint variables, so this pattern is a good fit for asynchronous workflows.

config = {"configurable": {"thread_id": "1"}}

user_request = "Can you add white water rafting to this itinerary"

run_travel_planner(user_request, config)

Run with another session

This session does not have the previous conversation, so the planner creates a new travel plan based only on white water rafting, without boating or swimming.

config = {"configurable": {"thread_id": "11"}}

user_request = "Can you add white water rafting to itinerary"

run_travel_planner(user_request, config)
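The behaviour seen in the last two runs can be pictured with a toy checkpointer that keeps one saved state per thread ID. This is a standard-library sketch only: `ThreadCheckpoints` and `run_turn` are hypothetical names and do not reflect how MemorySaver is implemented.

```python
from typing import Dict, List


class ThreadCheckpoints:
    """Toy stand-in for a checkpointer: one saved state per thread_id."""

    def __init__(self) -> None:
        self._saved: Dict[str, Dict[str, List[str]]] = {}

    def load(self, thread_id: str) -> Dict[str, List[str]]:
        # Unknown threads start with an empty conversation.
        return self._saved.get(thread_id, {"messages": []})

    def save(self, thread_id: str, state: Dict[str, List[str]]) -> None:
        self._saved[thread_id] = state


def run_turn(checkpoints: ThreadCheckpoints, thread_id: str, user_message: str) -> List[str]:
    # Restore the thread's state, add the new message, and save it again.
    state = checkpoints.load(thread_id)
    messages = state["messages"] + [user_message]
    checkpoints.save(thread_id, {"messages": messages})
    return messages


checkpoints = ThreadCheckpoints()
run_turn(checkpoints, "1", "boating and swimming")
same_thread = run_turn(checkpoints, "1", "add white water rafting")
new_thread = run_turn(checkpoints, "11", "add white water rafting")
print(same_thread)
print(new_thread)
```

Thread "1" sees both requests, while thread "11" starts from an empty history.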

Memory

Memory is key for any agentic conversation that is multi-turn or involves multi-agent collaboration, and even more so if it spans multiple days. The three main aspects of agents are:

1. Tools

2. Memory

3. Planners

Explore External Store for memory

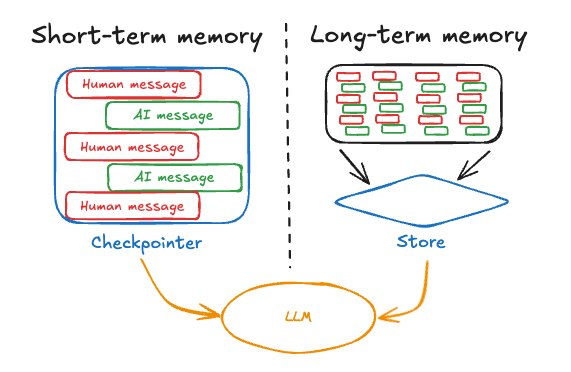

There are two types of memory for AI agents: short-term memory and long-term memory. Further reading is available at this link.

Conversation memory is illustrated by the diagram, which shows the turn-by-turn conversations that agents need to access and that are then saved as a summary for long-term memory.
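One way to picture the split between short-term and long-term memory is a buffer that keeps the latest turns verbatim and folds older turns into a running summary. The sketch below uses only the standard library. `ConversationMemory`, `naive_summary`, and the `max_recent` parameter are assumptions made for illustration, and the string-joining summarizer is a placeholder for what would more likely be an LLM call.

```python
from typing import List


def naive_summary(previous: str, turns: List[str]) -> str:
    # Placeholder summarizer: simply joins the older turns together.
    parts = ([previous] if previous else []) + turns
    return " | ".join(parts)


class ConversationMemory:
    def __init__(self, max_recent: int = 2) -> None:
        self.max_recent = max_recent
        self.recent: List[str] = []  # short-term: recent turns, verbatim
        self.summary: str = ""       # long-term: condensed older turns

    def add_turn(self, turn: str) -> None:
        self.recent.append(turn)
        overflow = len(self.recent) - self.max_recent
        if overflow > 0:
            # Move the oldest turns out of the buffer and into the summary.
            old, self.recent = self.recent[:overflow], self.recent[overflow:]
            self.summary = naive_summary(self.summary, old)


memory_sketch = ConversationMemory(max_recent=2)
for turn in ["wants boating", "wants swimming", "add rafting"]:
    memory_sketch.add_turn(turn)

print(memory_sketch.recent)
print(memory_sketch.summary)
```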

Create an external Memory persistence

In this section we will leverage multi-thread, multi-session persistence for chat messages. Ideally, you would use a persistent store such as Redis to save the messages for each session.

Memory Management

- We can follow several patterns. Each agent can have its own session memory.

- Alternatively, the whole graph can have a combined memory, in which case each agent gets its own section of that memory.

The MemorySaver and the Store both have the concept of separating sections of memory by namespace or by thread ID. These can be leveraged in two ways:

1. Use the graph-level messages or memory.

2. Give each agent its own memory, either through its own space in the saver or through its own saver, as we do in the ReAct agent.
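The two options above can be pictured with a dictionary keyed by namespace tuples. This is a standard-library sketch, not the LangGraph store: `NamespacedStore` and the namespace layouts (`("graph", ...)` and `("agent", ...)`) are hypothetical choices made for the example.

```python
from typing import Any, Dict, List, Optional, Tuple

Namespace = Tuple[str, ...]


class NamespacedStore:
    """Toy store that separates values by (namespace, key)."""

    def __init__(self) -> None:
        self._data: Dict[Tuple[Namespace, str], Dict[str, Any]] = {}

    def put(self, namespace: Namespace, key: str, value: Dict[str, Any]) -> None:
        self._data[(namespace, key)] = value

    def get(self, namespace: Namespace, key: str) -> Optional[Dict[str, Any]]:
        return self._data.get((namespace, key))

    def list_namespaces(self) -> List[Namespace]:
        return sorted({namespace for namespace, _ in self._data})


store_sketch = NamespacedStore()

# Option 1: graph-level memory shared by every agent in the session.
store_sketch.put(("graph", "session_1"), "messages", {"data": ["plan a day trip"]})

# Option 2: memory that belongs to a single agent within the session.
store_sketch.put(("agent", "itinerary_agent", "session_1"), "messages", {"data": ["draft v1"]})

print(store_sketch.list_namespaces())
print(store_sketch.get(("graph", "session_1"), "messages"))
print(store_sketch.get(("agent", "other_agent", "session_1"), "messages"))
```

An agent that was never written to, such as `other_agent` here, simply finds nothing in its namespace.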

from typing import Any, Iterable, Optional

from langgraph.store.base import BaseStore, Item, Op, Result
from langgraph.store.memory import InMemoryStore

class CustomMemoryStore(BaseStore):
    """Thin wrapper that delegates every operation to an underlying store."""

    def __init__(self, ext_store):
        self.store = ext_store

    def get(self, namespace: tuple[str, ...], key: str) -> Optional[Item]:
        return self.store.get(namespace, key)

    def put(self, namespace: tuple[str, ...], key: str, value: dict[str, Any]) -> None:
        return self.store.put(namespace, key, value)

    def batch(self, ops: Iterable[Op]) -> list[Result]:
        return self.store.batch(ops)

    async def abatch(self, ops: Iterable[Op]) -> list[Result]:
        # Await the delegate so callers receive results, not a coroutine.
        return await self.store.abatch(ops)

Quick look at how to use this store

in_memory_store = CustomMemoryStore(InMemoryStore())

namespace_u = ("chat_messages", "user_id_1")

key_u="user_id_1"

in_memory_store.put(namespace_u, key_u, {"data":["list a"]})

item_u = in_memory_store.get(namespace_u, key_u)

print(item_u.value, item_u.value['data'])

in_memory_store.list_namespaces()

Create a graph similar to the earlier one. Note that we will not have any messages in the graph state, as they have been externalized.

class PlannerState(TypedDict):
    itinerary: str
    city: str
    user_message: str

def input_interests(state: PlannerState, config: RunnableConfig, *, store: BaseStore) -> PlannerState:
    # The request is already in the state, so this node passes it through.
    return {
        **state,
    }

def create_itinerary(state: PlannerState, config: RunnableConfig, *, store: BaseStore) -> PlannerState:
    # Get the history for this thread from the store.
    user_u = f"user_id_{config['configurable']['thread_id']}"
    namespace_u = ("chat_messages", user_u)
    store_item = store.get(namespace=namespace_u, key=user_u)
    chat_history_messages = store_item.value['data'] if store_item else []
    print(user_u, chat_history_messages)
    response = llm.invoke(
        itinerary_prompt.format_messages(
            city=state['city'],
            user_message=state['user_message'],
            chat_history=chat_history_messages,
        )
    )
    print("\nFinal Itinerary:")
    print(response.content)
    # Write the updated history back to the store.
    store.put(
        namespace=namespace_u,
        key=user_u,
        value={"data": chat_history_messages + [HumanMessage(content=state['user_message']), AIMessage(content=response.content)]},
    )
    return {
        **state,
        "itinerary": response.content,
    }

in_memory_store_n = CustomMemoryStore(InMemoryStore())

workflow = StateGraph(PlannerState)

#workflow.add_node("input_city", input_city)

workflow.add_node("input_interests", input_interests)

workflow.add_node("create_itinerary", create_itinerary)

workflow.set_entry_point("input_interests")

#workflow.add_edge("input_city", "input_interests")

workflow.add_edge("input_interests", "create_itinerary")

workflow.add_edge("create_itinerary", END)

app = workflow.compile(store=in_memory_store_n)

def run_travel_planner(user_request: str, config_dict: dict):
    print(f"Current User Request: {user_request}\n")
    init_input = {"user_message": user_request, "city": "Seattle"}
    for output in app.stream(init_input, config=config_dict, stream_mode="values"):
        pass  # The nodes themselves handle all printing

config = {"configurable": {"thread_id": "1"}}

user_request = "Can you create a itinerary for a day trip in california with boating and swimming options. I need a complete plan that budgets for travel time and meal time."

run_travel_planner(user_request, config)

config = {"configurable": {"thread_id": "1"}}

user_request = "Can you add itinerary for white water rafting to this"

run_travel_planner(user_request, config)

Quick look at the store

This shows the history of the chat messages.

print(in_memory_store_n.list_namespaces())

print(in_memory_store_n.get(('chat_messages', 'user_id_1'),'user_id_1').value)

Finally, we review the concept of having each agent backed by its own memory

For this we will leverage RunnableWithMessageHistory when creating the agent. Here we use an InMemoryChatMessageHistory to simulate the history store, but this would be externalized in production use cases. Treat this as a sample.

from langchain_core.chat_history import InMemoryChatMessageHistory

from langchain_core.output_parsers import StrOutputParser

from langchain_core.prompts import ChatPromptTemplate

from langchain_core.runnables.history import RunnableWithMessageHistory

# ---- ⚠️ Update region for your AWS setup ⚠️ ----

bedrock_client = boto3.client("bedrock-runtime", region_name="us-west-2")

model_id = "anthropic.claude-3-haiku-20240307-v1:0"

#model_id = "anthropic.claude-3-sonnet-20240229-v1:0"#

#model_id="anthropic.claude-3-5-sonnet-20240620-v1:0"

provider_id = "anthropic"

chatbedrock_llm = ChatBedrockConverse(
    model=model_id,
    provider=provider_id,
    temperature=0,
    max_tokens=None,
    client=bedrock_client,
    # other params...
)

itinerary_prompt = ChatPromptTemplate.from_messages([
    ("system", """You are a helpful travel assistant. Create a day trip itinerary for {city} based on the user's interests.
Follow these instructions:
1. Use the below chat conversation and the latest input from Human to get the user interests.
2. Always account for travel time and meal times - if it's not possible to do everything, then say so.
3. If the user hasn't stated a time of year or season, assume summer season in {city} and state this assumption in your response.
4. If the user hasn't stated a travel budget, assume a reasonable dollar amount and state this assumption in your response.
5. Provide a brief, bulleted itinerary in chronological order with specific hours of day."""),
    MessagesPlaceholder("chat_history"),
    ("human", "{user_message}"),
])

chain = itinerary_prompt | chatbedrock_llm

history = InMemoryChatMessageHistory()

def get_history():
    # A single shared history object; every call sees the same conversation.
    return history

wrapped_chain = RunnableWithMessageHistory(
    chain,
    get_history,
    history_messages_key="chat_history",
)
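Conceptually, a history wrapper of this kind does three things on each call: it prepends the stored history, calls the model, and records the new human and AI turns. The sketch below shows that idea with the standard library only. `with_history`, `fake_model`, and the `(role, content)` tuples are hypothetical and are not the RunnableWithMessageHistory implementation.

```python
from typing import Callable, List, Tuple

Message = Tuple[str, str]  # (role, content)


def fake_model(messages: List[Message]) -> str:
    # Stand-in for the LLM: reports how many earlier messages it was given.
    return f"reply using {len(messages) - 1} earlier messages"


def with_history(model: Callable[[List[Message]], str], history: List[Message]) -> Callable[[str], str]:
    def invoke(user_message: str) -> str:
        # 1. Prepend the stored history. 2. Call the model. 3. Record both turns.
        prompt = history + [("human", user_message)]
        reply = model(prompt)
        history.extend([("human", user_message), ("ai", reply)])
        return reply

    return invoke


agent_history: List[Message] = []
agent = with_history(fake_model, agent_history)

first = agent("boating and swimming")
second = agent("add white water rafting")
print(first)
print(second)
```

Because the history lives with the agent rather than in the graph state, the graph itself no longer needs a messages key.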

class PlannerState(TypedDict):
    itinerary: str
    city: str
    user_message: str

# This graph is compiled without a store, so the nodes no longer take a store argument.
def input_interests(state: PlannerState, config: RunnableConfig) -> PlannerState:
    # The request is already in the state, so this node passes it through.
    return {
        **state,
    }

def create_itinerary(state: PlannerState, config: RunnableConfig) -> PlannerState:
    # Each agent manages its own memory through the history wrapper.
    response = wrapped_chain.invoke(
        {"city": state['city'], "user_message": state['user_message'], "input": state['user_message']}
    )
    print("\nFinal Itinerary:")
    print(response.content)
    return {
        **state,
        "itinerary": response.content,
    }

workflow = StateGraph(PlannerState)

#workflow.add_node("input_city", input_city)

workflow.add_node("input_interests", input_interests)

workflow.add_node("create_itinerary", create_itinerary)

workflow.set_entry_point("input_interests")

#workflow.add_edge("input_city", "input_interests")

workflow.add_edge("input_interests", "create_itinerary")

workflow.add_edge("create_itinerary", END)

app = workflow.compile()

def run_travel_planner(user_request: str, config_dict: dict):
    print(f"Current User Request: {user_request}\n")
    init_input = {"user_message": user_request, "city": "Seattle"}
    for output in app.stream(init_input, config=config_dict, stream_mode="values"):
        pass  # The nodes themselves handle all printing

config = {"configurable": {"thread_id": "1"}}

user_request = "Can you create a itinerary for boating, swim. Need a complete plan"

run_travel_planner(user_request, config)

user_request = "Can you add white water rafting to this itinerary"

run_travel_planner(user_request, config)

Conclusion

You have successfully executed a simple LangGraph implementation. This lab demonstrates how LangGraph can be used to create a simple yet effective Travel Planner. By structuring our application as a graph of interconnected nodes, we achieve a clear separation of concerns and an easily modifiable workflow. This approach can be extended to more complex applications, showcasing the power and flexibility of graph-based designs in AI-driven conversational interfaces.

Please proceed to the next lab.