CloudTrail Data Events

According to the AWS CloudTrail best practices, you should record data events for security-sensitive workloads at the multi-region trail level. For workloads with intensive compliance requirements, we recommend that you enable data events to audit resource-level access activity. Logging data events lets you audit at the data level, including changes made inside the resources for which you enable visibility.

CloudTrail provides added observability and supports data events for a wide variety of services. These data events can help you meet your critical compliance, risk, and security objectives. Some examples of these types of events include object-level API activities such as delete, update, and put items. Examples of the enhanced visibility provided by CloudTrail data events include API activity on an agent alias or knowledge base in Amazon Bedrock, activity on an application or data source in Amazon Q Business, or SageMaker API activity on a feature store. These deliver key risk management benefits such as:

- Monitoring access to personal data and sensitive information

- Visibility into personal data and sensitive data modifications

- Auditing activities in applications that handle personal data and sensitive information

- Detecting potential data breaches and privacy incidents

- Facilitating privacy audits and compliance reporting

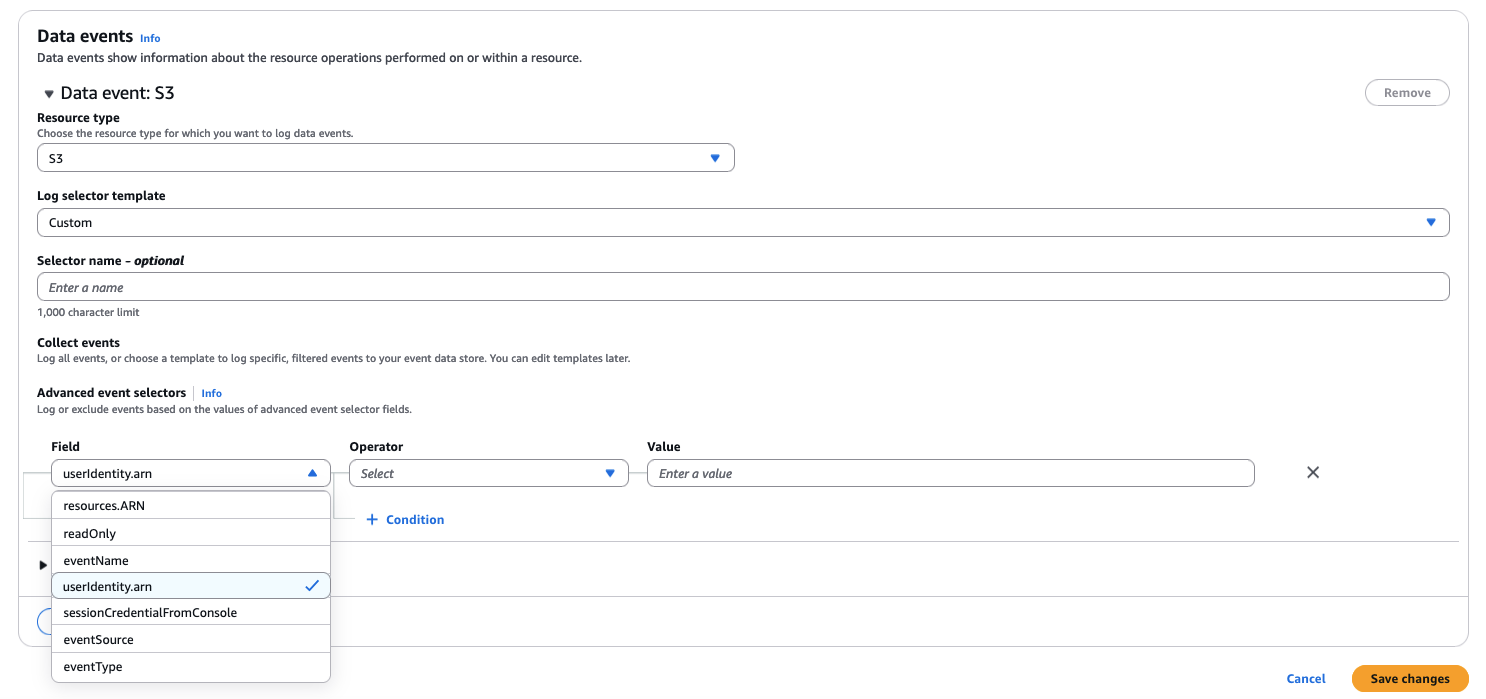

Advanced Event Selectors for Data Events

When you use data events, advanced event selectors offer greater control over which CloudTrail events are ingested into your event data stores. With advanced event selectors, you can include or exclude values on fields such as eventSource, eventName, userIdentity.arn, and resources.ARN. Advanced event selectors also support including or excluding values with pattern matching on partial strings. This increases the efficiency and precision of your security, compliance, and operational investigations while helping reduce costs. For example, you can filter CloudTrail events based on the userIdentity.arn attribute to exclude events generated by specific IAM roles or users, such as a dedicated IAM role used by a service that performs frequent API calls for monitoring purposes. This can significantly reduce the volume of CloudTrail events ingested into CloudTrail Lake, lowering costs while maintaining visibility into relevant user and system activities.
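As a rough illustration of the exclusion described above, the sketch below builds one advanced event selector as a Python dictionary and prints it as JSON. The account ID, role name, and selector name are hypothetical placeholders, and the choice of Amazon SNS as the resource type is an assumption made for the example. Field and operator support can differ between trails and event data stores, so treat this as a starting point to check against the CloudTrail documentation.

```python
import json

# Hypothetical ARN prefix for sessions of a monitoring role
# (placeholder account ID and role name, not from the article).
MONITORING_ROLE_PREFIX = (
    "arn:aws:sts::111122223333:assumed-role/ExampleMonitoringRole/"
)

# One advanced event selector: log Amazon SNS data events,
# except those whose caller ARN starts with the monitoring role prefix.
selector = {
    "Name": "SNS data events excluding monitoring role",
    "FieldSelectors": [
        {"Field": "eventCategory", "Equals": ["Data"]},
        {"Field": "resources.type", "Equals": ["AWS::SNS::Topic"]},
        {"Field": "userIdentity.arn", "NotStartsWith": [MONITORING_ROLE_PREFIX]},
    ],
}

advanced_event_selectors = [selector]
print(json.dumps(advanced_event_selectors, indent=2))
```

The printed JSON follows the shape of the advanced event selectors parameter used when creating or updating an event data store. `NotStartsWith` is used here instead of `NotEquals` because assumed-role ARNs end with a session name that varies per call.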

Workload Specific

The following sections provide some best practices for monitoring and auditing specific workloads using the resource types available for trails and event data stores. For example, when logging data events for Amazon S3, you would want to capture API operations such as PutObject to record resource-level operations made on Amazon S3 objects. This provides visibility into actions taken at the resource level for Amazon S3 objects.

Amazon SNS and Amazon SQS

The Amazon SNS security best practices and Amazon SQS security best practices recommend that you consider using VPC endpoints to access Amazon SNS and Amazon SQS. For example, if you have Amazon SNS topics or Amazon SQS queues that you must be able to interact with, but which must absolutely not be exposed to the internet, you can use VPC endpoints to restrict access so that only hosts within a particular VPC can publish or send messages to an Amazon SNS topic or Amazon SQS queue.

By enabling data events for Amazon SNS in CloudTrail, you can audit Publish and PublishBatch API actions for all your Amazon SNS topics. Similarly, enabling data events for Amazon SQS lets you audit SendMessage API actions and verify that VPC endpoints are being used when instances within your VPC send messages to Amazon SQS queues, without traversing the internet.

The query below shows API actions for the Publish events from Amazon SNS, indicating that messages were being published to an Amazon SNS topic. The results also show which IAM entity took this action and the specific VPC endpoint ID through which the message was sent. If there is no VPC endpoint ID, the Publish API call was made without using a VPC endpoint.

SQL Query:

SELECT eventTime,

substr(userIdentity.arn, strpos(userIdentity.arn, '/') +1) as IAM,

recipientAccountId,

awsRegion,

eventName,sourceIPAddress,

element_at(requestParameters, 'topicArn') as Topic,

vpcEndpointId

FROM $EDS_ID

WHERE eventSource = 'sns.amazonaws.com'

AND eventName = 'Publish'

AND eventtime >= '2024-06-24 00:00:00'

AND eventtime <= '2024-06-24 23:59:59'
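Once the query results are exported, the check described above (an empty VPC endpoint ID means the call did not go through a VPC endpoint) can be applied programmatically. The following is a minimal sketch, assuming each result row has been loaded as a dictionary keyed by the column names in the query. The helper name and the sample rows are invented for illustration and are not real CloudTrail output.

```python
def split_by_vpc_endpoint(rows):
    """Partition rows into (via_endpoint, not_via_endpoint) using vpcEndpointId."""
    via_endpoint, not_via_endpoint = [], []
    for row in rows:
        # A missing, None, or empty vpcEndpointId is treated as "no VPC endpoint used".
        if row.get("vpcEndpointId"):
            via_endpoint.append(row)
        else:
            not_via_endpoint.append(row)
    return via_endpoint, not_via_endpoint


# Invented sample rows for illustration only.
sample_rows = [
    {"IAM": "app-role/session-1", "eventName": "Publish",
     "vpcEndpointId": "vpce-0123456789abcdef0"},
    {"IAM": "admin-user", "eventName": "Publish", "vpcEndpointId": None},
    {"IAM": "batch-role/session-2", "eventName": "Publish", "vpcEndpointId": ""},
]

via, direct = split_by_vpc_endpoint(sample_rows)
for row in direct:
    print(f"{row['IAM']} called {row['eventName']} without a VPC endpoint")
```

Rows in the second list are the ones worth reviewing, since they correspond to calls that did not report a VPC endpoint ID.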

Similar to the Amazon SNS query, the query below shows API actions for the SendMessage events from Amazon SQS, indicating that messages were being sent to a specific Amazon SQS queue. The results also show which IAM entity took the action and the specific VPC endpoint ID through which the message was sent.

SQL Query:

SELECT eventTime,

substr(userIdentity.arn, strpos(userIdentity.arn, '/') +1) as IAM,

recipientAccountId,

awsRegion,

eventName,sourceIPAddress,

substr(element_At(requestParameters, 'queueUrl'),

strpos(element_At(requestParameters, 'queueUrl'), '.com/') +18) as Queue,

vpcEndpointId

FROM $EDS_ID

WHERE eventSource = 'sqs.amazonaws.com'

AND eventName = 'SendMessage'

AND eventtime >= '2024-06-24 00:00:00'

AND eventtime <= '2024-06-24 23:59:59'
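The `+18` offset in the `Queue` expression is easy to misread. The sketch below mimics the 1-based `strpos` and `substr` semantics in Python to show why the offset lands on the queue name: `.com/` is 5 characters, followed by a 12-digit account ID and one `/`. The queue URL is a made-up example, and the two helper functions are simplified stand-ins, not the SQL engine's implementation.

```python
def strpos(s, sub):
    """1-based position of sub in s, or 0 if absent (mirrors SQL strpos)."""
    return s.find(sub) + 1


def substr(s, start):
    """Suffix of s from 1-based position start (two-argument SQL substr, start >= 1)."""
    return s[start - 1:]


# Made-up queue URL with a placeholder 12-digit account ID.
queue_url = "https://sqs.us-east-1.amazonaws.com/111122223333/ExampleQueue"

# Characters to skip, counted from the '.' of ".com/": 5 + 12 + 1 = 18.
offset = len(".com/") + 12 + len("/")
queue_name = substr(queue_url, strpos(queue_url, ".com/") + offset)
print(offset, queue_name)  # prints: 18 ExampleQueue
```

The offset only works when the value contains `.com/` followed by a 12-digit account ID, which is the assumption this example makes about the `queueUrl` request parameter.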

Amazon Q Business

For Amazon Q Business workloads, you can configure data events for AWS::QBusiness::Application and AWS::S3::Object. The AWS::QBusiness::Application resource type logs data plane activities related to your Amazon Q Business application, and the AWS::S3::Object resource type records data events for the source Amazon S3 bucket. Once the data events are configured for your trail or event data store, events will start to be generated for Amazon Q Business and Amazon S3.
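The two resource types named above could be expressed as advanced event selectors along the following lines. This is a minimal sketch: the helper name and the selector names are hypothetical, and the selectors log every data event for each resource type without any further filtering.

```python
import json


def data_event_selector(resource_type):
    """Build a minimal advanced event selector for all data events of one resource type."""
    return {
        "Name": f"Data events for {resource_type}",
        "FieldSelectors": [
            {"Field": "eventCategory", "Equals": ["Data"]},
            {"Field": "resources.type", "Equals": [resource_type]},
        ],
    }


# Resource types taken from the paragraph above.
resource_types = ["AWS::QBusiness::Application", "AWS::S3::Object"]
selectors = [data_event_selector(rt) for rt in resource_types]
print(json.dumps(selectors, indent=2))
```

In practice you would likely narrow the Amazon S3 selector to the source bucket, for example by adding a `resources.ARN` field selector with a `StartsWith` value for that bucket's ARN, so that object activity in unrelated buckets is not logged.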

The query below counts Amazon Q Business API calls by event name over the past day. BatchDeleteDocument in the results indicates the deletion of one or more documents that were used in your Amazon Q Business application.

SQL Query:

SELECT

eventName, COUNT(*) AS numberOfCalls

FROM

<event-data-store-ID>

WHERE

eventSource='qbusiness.amazonaws.com' AND eventTime > date_add('day', -1, now())

GROUP BY eventName

ORDER BY COUNT(*) DESC

The query below helps find the IAM identity associated with the BatchDeleteDocument API call.

SQL Query:

SELECT

sourceIPAddress, eventTime, userIdentity.principalid

FROM

<event-data-store-ID>

WHERE

eventName='BatchDeleteDocument' AND eventTime > date_add('day', -1, now())

The query below shows who triggered the synchronization of the S3 data source with the Amazon Q Business application by looking for the StartDataSourceSyncJob API call.

SQL Query:

SELECT

sourceIPAddress, eventTime, userIdentity.arn AS user

FROM

<event-data-store-ID>

WHERE

eventName='StartDataSourceSyncJob' AND eventTime > date_add('day', -1, now())

The following query shows whether any objects were deleted from the S3 bucket that is connected as a data source to your Amazon Q Business application by checking for the DeleteObject API event:

SQL Query:

SELECT

sourceIPAddress, eventTime, userIdentity.arn AS user

FROM

<event-data-store-ID>

WHERE

userIdentity.arn IS NOT NULL AND eventName='DeleteObject'

AND element_at(requestParameters, 'bucketName') like '<enter-S3-bucket-name>'

AND eventTime > '2024-05-09 00:00:00'

Amazon Q Developer

One of the events you can track using data events for Amazon Q Developer is ‘StartCodeAnalysis’, which tracks security scans performed by Amazon Q Developer for VS Code and JetBrains IDEs.

The query below retrieves a list of all users who initiated a security scan. This helps identify which users utilize Amazon Q Developer to analyze code within your organization and determine the source of their requests.

SELECT

userIdentity.onbehalfof.userid, eventTime, SourceIPAddress

FROM

<event-data-store-ID>

WHERE

eventName = 'StartCodeAnalysis'

Amazon Bedrock

CloudTrail data events for Amazon Bedrock track API events for Agents for Bedrock and Amazon Bedrock knowledge bases through the ‘AWS::Bedrock::AgentAlias’ and ‘AWS::Bedrock::KnowledgeBase’ resource type actions.

For example, if the administrator of a chat application wants to audit the events related to Bedrock agents’ invocation, they can use the following query, which will help determine the details on request and response parameters sent along with the invoked agent alias.

SELECT

UserIdentity,eventTime,eventName,UserAgent,requestParameters,resources

FROM

<event-data-store-ID>

WHERE

eventName = 'InvokeAgent'

Additionally, the below query will provide details on the invoked knowledge base, along with the returned request and response parameters:

SELECT

UserIdentity,eventTime,eventName,UserAgent,requestParameters,resources

FROM

<event-data-store-ID>

WHERE

eventName = 'RetrieveAndGenerate'