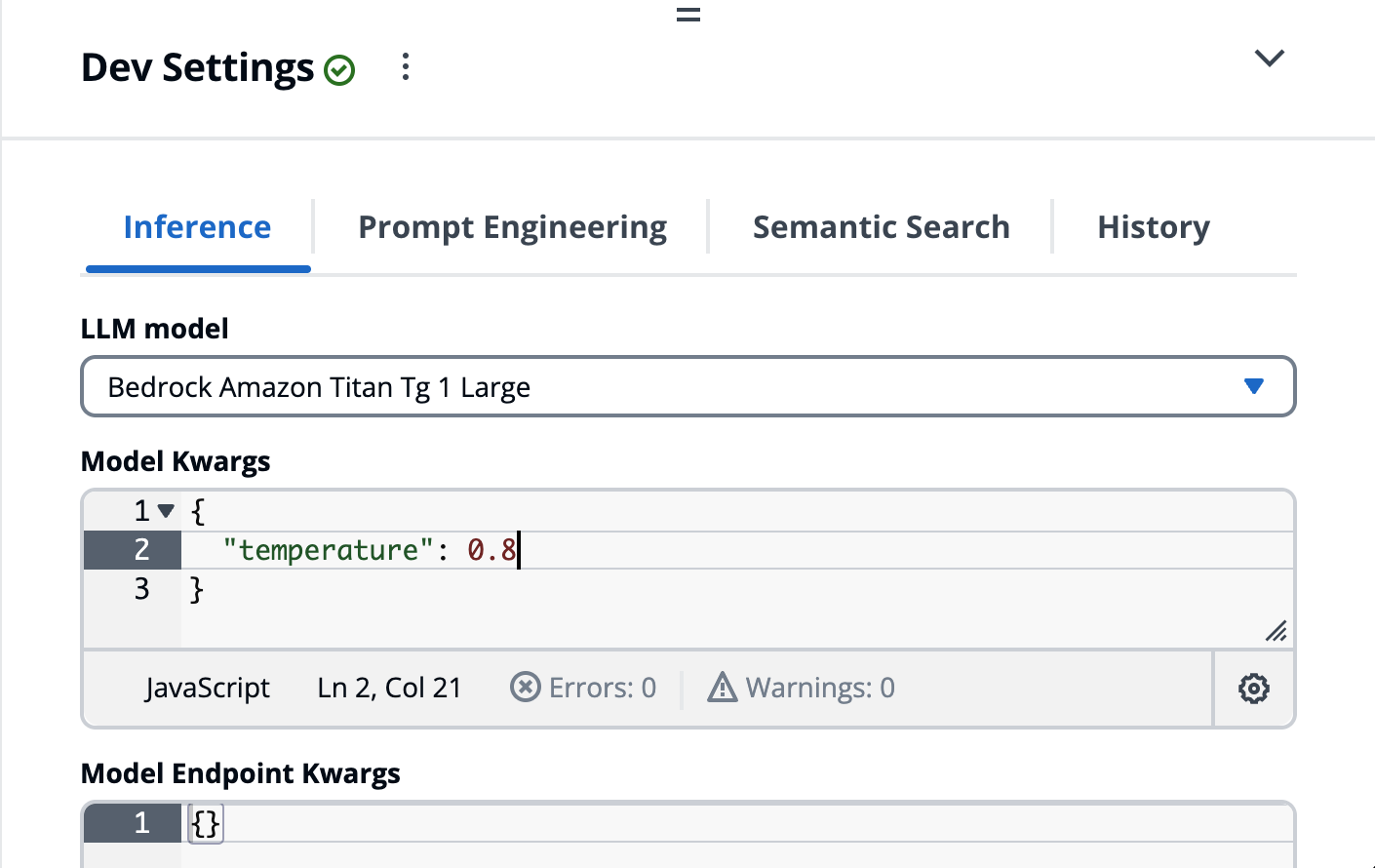

Inference (Dev Settings)

Disclaimer: Use of Third-Party Models

By using this sample, you agree that you may be deploying third-party models (“Third-Party Model”) into your specified user account. AWS does not own and does not exercise any control over these Third-Party Models. You should perform your own independent assessment, and take measures to ensure that you comply with your own specific quality control practices and standards, and the local rules, laws, regulations, licenses and terms of use that apply to you, your content, and the Third-Party Models, and any outputs from the Third-Party Models. AWS does not make any representations or warranties regarding the Third-Party Models.

The inference settings control which model is used for inference. You can choose one of the models deployed or configured with the solution, or integrate a custom model hosted outside the solution.

This lets you test different configurations, such as Model Kwargs and Endpoint Kwargs, without requiring a deployment. With custom models, you can also test a model deployed outside of the solution (e.g. SageMaker JumpStart), or modify the additional configuration of one of the deployed frameworks.

Custom Model Integration

Most approaches to model integration define a backend adapter for each type of model (Falcon, Llama2, etc.), which requires writing and deploying code before a new model can be tested within the full application. Our goal is to support trying out any model without requiring any code or deployment, which led us to implement a dynamic adapter system that is model-agnostic and completely serializable. This enables developers to define model adapters at runtime from the UI via the Dev Settings.
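
To make the idea of a serializable, model-agnostic adapter more concrete, here is a minimal Python sketch. It is not the solution's actual adapter schema: the `AdapterConfig` class, its fields (`prompt_template`, `output_path`, `model_kwargs`), and the `inputs`/`parameters` request shape are all assumptions chosen for illustration. The point is only that an adapter made of plain data can be sent from a UI as JSON and applied at runtime without deploying code.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Any


@dataclass
class AdapterConfig:
    """Hypothetical adapter definition built only from JSON-friendly data."""

    prompt_template: str = "{prompt}"
    # Keys and indices to walk through the model response to reach the text.
    output_path: list = field(default_factory=list)
    model_kwargs: dict = field(default_factory=dict)

    def to_json(self) -> str:
        return json.dumps(asdict(self))

    @classmethod
    def from_json(cls, raw: str) -> "AdapterConfig":
        return cls(**json.loads(raw))


def build_request(config: AdapterConfig, prompt: str) -> dict:
    """Render the prompt template and attach the model kwargs (assumed payload shape)."""
    return {
        "inputs": config.prompt_template.format(prompt=prompt),
        "parameters": dict(config.model_kwargs),
    }


def parse_response(config: AdapterConfig, response: Any) -> Any:
    """Follow output_path through the response to extract the generated text."""
    value = response
    for key in config.output_path:
        value = value[key]
    return value


# Example: an adapter received as JSON, as if submitted from a settings form.
example = AdapterConfig.from_json(
    '{"prompt_template": "User: {prompt}\\nAssistant:",'
    ' "output_path": [0, "generated_text"],'
    ' "model_kwargs": {"max_new_tokens": 256}}'
)
```

Because the adapter is data rather than code, switching to a model with a different prompt format or response shape only means submitting a different JSON document.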

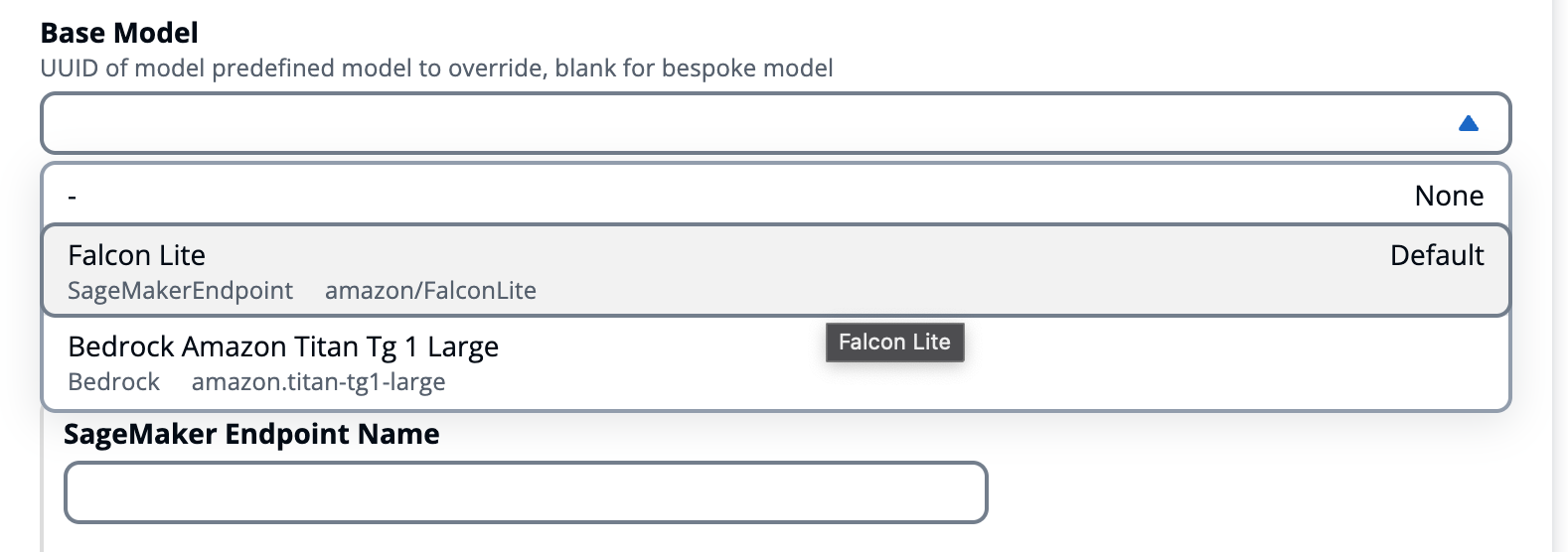

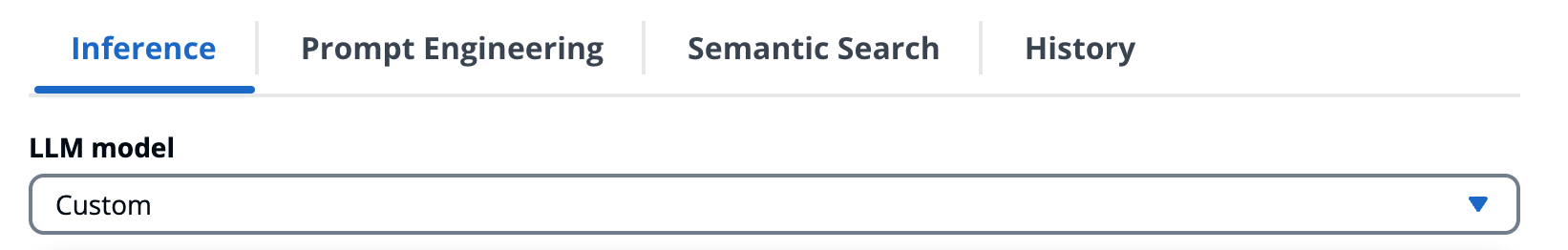

Choose Custom from the LLM model dropdown to integrate with a model deployed outside of the application.

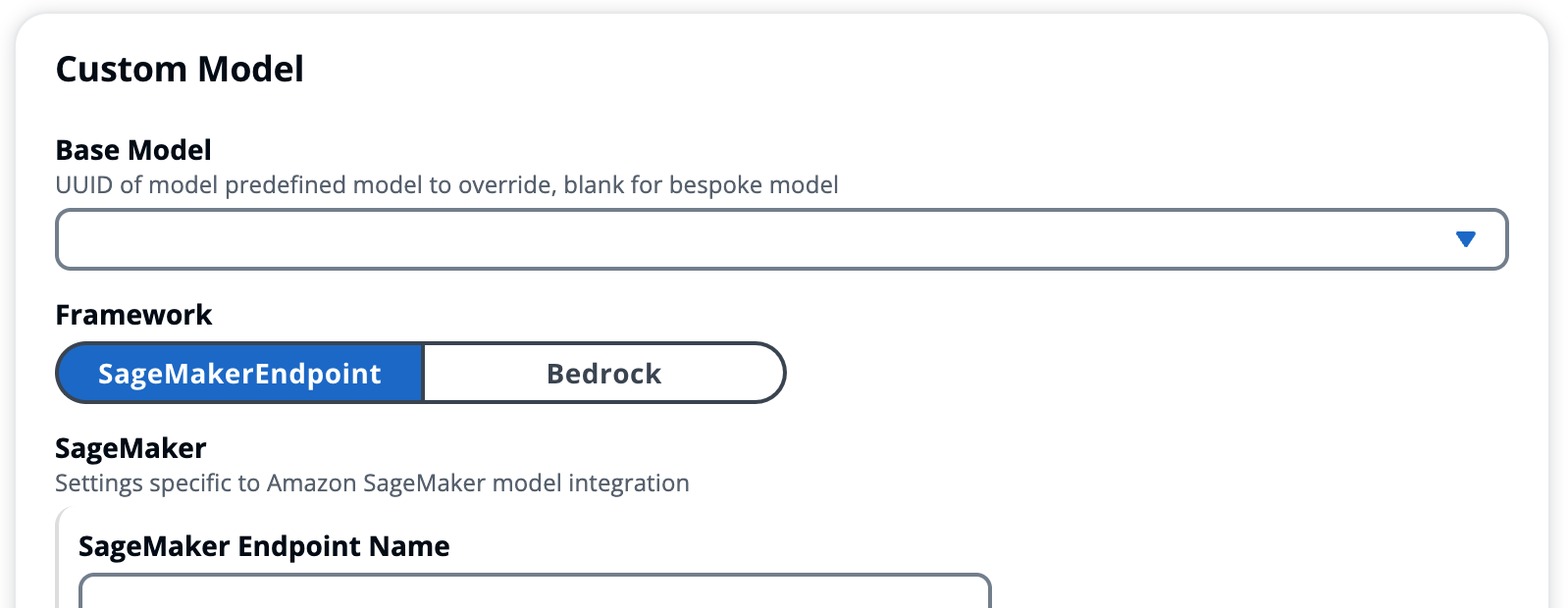

This expands a set of fields that let you integrate with any model deployed on one of the supported frameworks.

You can either overwrite the configuration of a predefined model that is deployed, or start from scratch (None).

- When you select a predefined model from the Base Model list, the fields are populated with that model's values.

Common Settings

The following fields are common across the integration abstractions:

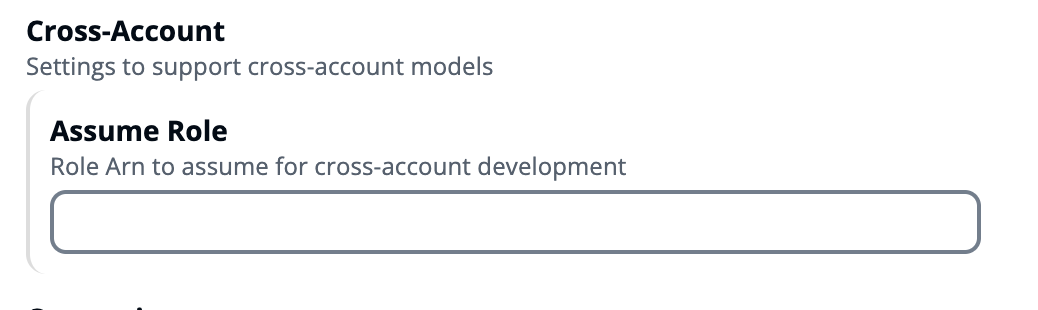

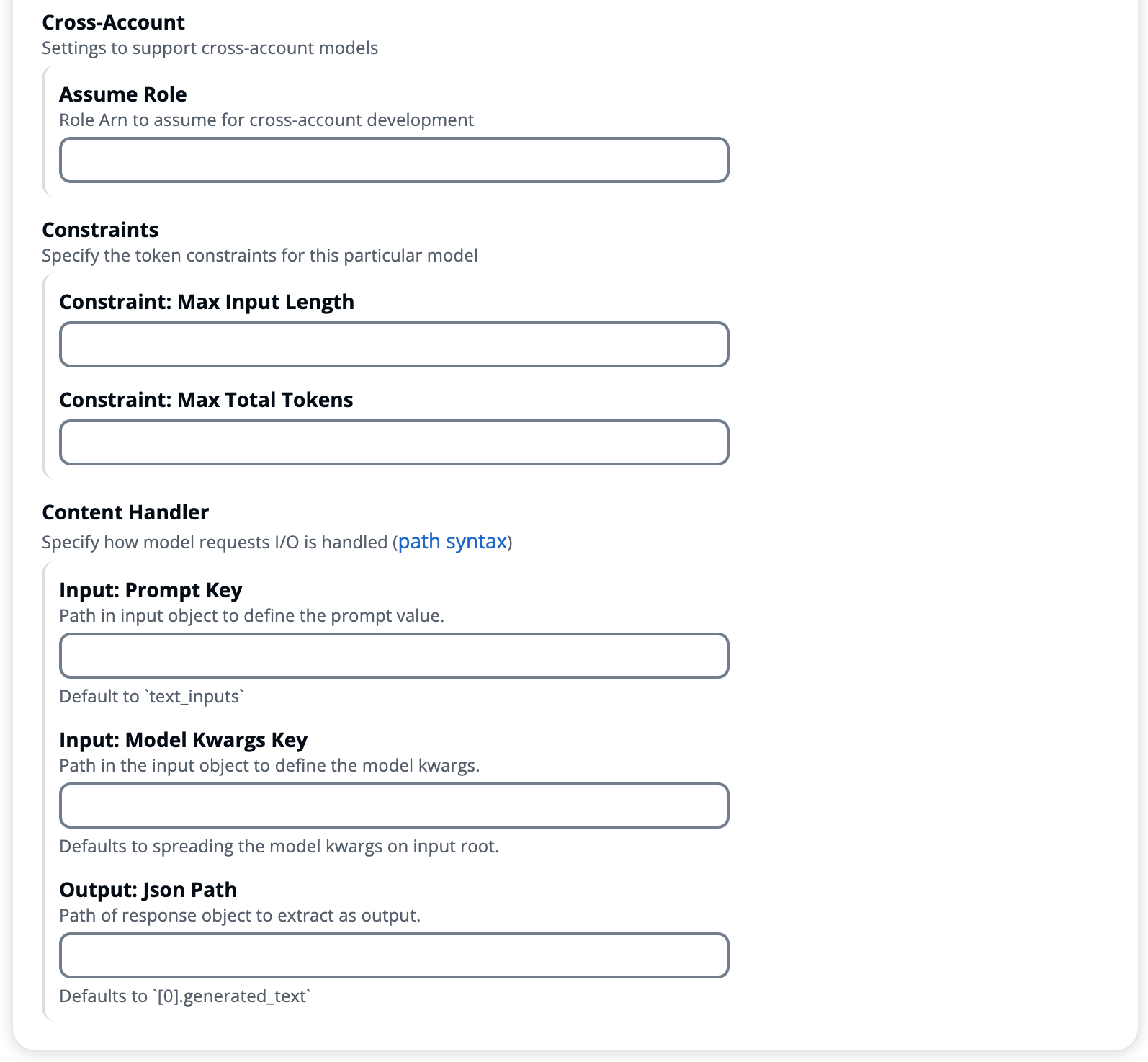

Cross-Account Model Integration

When you provide a cross-account IAM role, the inference engine first assumes that role and then uses the resulting temporary credentials to invoke the service endpoint via the AWS SDK. The role must have a trust policy attached that grants the application environment account permission to assume it (sts:AssumeRole).
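
The assume-then-invoke flow described above can be sketched in Python with boto3. This is an illustration under assumptions, not the inference engine's actual code: the helper name `assume_role_credentials`, the default session name, and the optional `sts_client` parameter are hypothetical. `assume_role` with `RoleArn` and `RoleSessionName` is the standard STS call.

```python
from typing import Any, Optional


def assume_role_credentials(
    role_arn: str,
    session_name: str = "inference-cross-account",  # hypothetical default
    sts_client: Optional[Any] = None,
) -> dict:
    """Assume role_arn and return keyword arguments for boto3 client creation."""
    if sts_client is None:
        # Imported lazily so the helper can be exercised with a stub client.
        import boto3

        sts_client = boto3.client("sts")

    response = sts_client.assume_role(
        RoleArn=role_arn,
        RoleSessionName=session_name,
    )
    creds = response["Credentials"]
    # These temporary credentials expire; a long-running service would refresh them.
    return {
        "aws_access_key_id": creds["AccessKeyId"],
        "aws_secret_access_key": creds["SecretAccessKey"],
        "aws_session_token": creds["SessionToken"],
    }
```

The returned dictionary can be unpacked into a service client, for example `boto3.client("sagemaker-runtime", **assume_role_credentials(role_arn))`, so that subsequent `invoke_endpoint` calls run with the assumed role's permissions in the target account.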

Cross-Account Security Considerations

By default, the application is configured to support assuming cross-account roles only in the Dev Stage environment, and only Administrators are allowed to pass runtime config overrides that specify a cross-account role to assume. Ensure that only trusted individuals are granted Administrator access. If you enable this functionality outside of the Dev Stage environment, take appropriate precautions to secure access to data and systems.

Trust Policy Example

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {
            "AWS": [
              // the account that is allowed to assume this role
              "arn:aws:iam::${TrustedAccountId}:root"
            ]
          },
          "Action": "sts:AssumeRole",
          "Condition": {}
        }
      ]
    }
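
The `//` comment and the `${TrustedAccountId}` placeholder in the example are annotations. JSON itself does not allow comments, so they need to be removed or substituted before the policy is attached to a role. One way to do that is to generate the document programmatically. The helper below is a hypothetical sketch (the function name and the 12-digit check are assumptions); it produces the same statement as the example above.

```python
import json
import re


def build_trust_policy(trusted_account_id: str) -> str:
    """Render the trust policy for one trusted account as valid JSON text."""
    # AWS account IDs are 12-digit numbers; reject anything else early.
    if not re.fullmatch(r"\d{12}", trusted_account_id):
        raise ValueError("expected a 12-digit AWS account ID")

    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    # The account that is allowed to assume this role.
                    "AWS": [f"arn:aws:iam::{trusted_account_id}:root"]
                },
                "Action": "sts:AssumeRole",
                "Condition": {},
            }
        ],
    }
    return json.dumps(policy, indent=2)
```

Calling `build_trust_policy("111122223333")` returns a string that can be pasted into the role's trust relationship or passed to tooling that expects a policy document.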

SageMaker Cross-Account Role Policy Example

Resource types defined by Amazon SageMaker

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "InvokeSagemaker",
          "Effect": "Allow",
          "Action": [
            // InvokeEndpoint + InvokeEndpointAsync
            "sagemaker:InvokeEndpoint*"
          ],
          "Resource": [
            // any model endpoint
            "arn:aws:sagemaker:${Region}:${Account}:endpoint/*",
            // specific model endpoint
            "arn:aws:sagemaker:${Region}:${Account}:endpoint/${EndpointName}"
          ]
        }
      ]
    }

Bedrock Cross-Account Role Policy Example

Resource types defined by Amazon Bedrock

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Action": "bedrock:Invoke*",
          "Resource": [
            // any foundation model
            "arn:aws:bedrock:${Region}::foundation-model/*",
            // specific foundation model
            "arn:aws:bedrock:${Region}::foundation-model/${ModelId}",
            // custom model
            "arn:aws:bedrock:${Region}:${Account}:custom-model/${ResourceId}"
          ],
          "Effect": "Allow"
        }
      ]
    }
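
The SageMaker and Bedrock examples list both wildcard and specific resources to show the available options. In practice a role would normally keep only the entries it needs. As a rough sketch, the hypothetical helpers below (all names are assumptions) fill in the ARN templates from the examples and emit a policy scoped to specific resources.

```python
import json


def sagemaker_endpoint_arn(region: str, account: str, endpoint_name: str) -> str:
    """Fill in the SageMaker endpoint ARN template used in the example policy."""
    return f"arn:aws:sagemaker:{region}:{account}:endpoint/{endpoint_name}"


def bedrock_foundation_model_arn(region: str, model_id: str) -> str:
    """Fill in the Bedrock foundation model ARN template (no account segment)."""
    return f"arn:aws:bedrock:{region}::foundation-model/{model_id}"


def build_invoke_policy(action: str, resources: list) -> str:
    """Render a single-statement Allow policy for the given action and ARNs."""
    if not resources:
        raise ValueError("at least one resource ARN is required")
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": action,
                "Resource": list(resources),
            }
        ],
    }
    return json.dumps(policy, indent=2)


# Example: allow invoking one named endpoint only (placeholder values).
sagemaker_policy = build_invoke_policy(
    "sagemaker:InvokeEndpoint*",
    [sagemaker_endpoint_arn("us-east-1", "111122223333", "my-endpoint")],
)
```

The same `build_invoke_policy` call works for Bedrock by passing `"bedrock:Invoke*"` together with an ARN from `bedrock_foundation_model_arn`.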

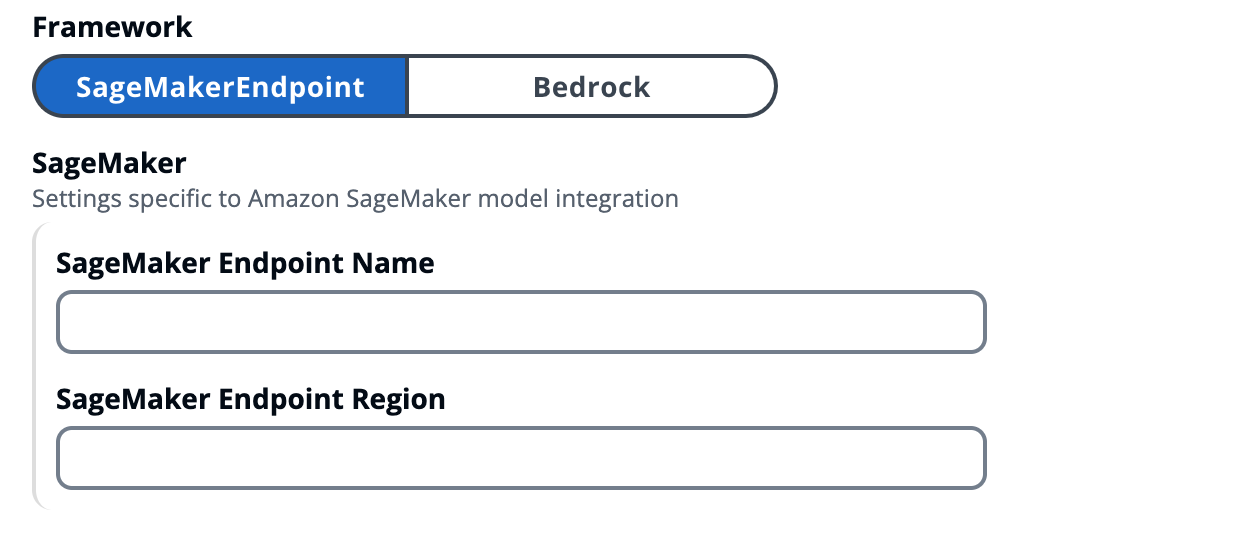

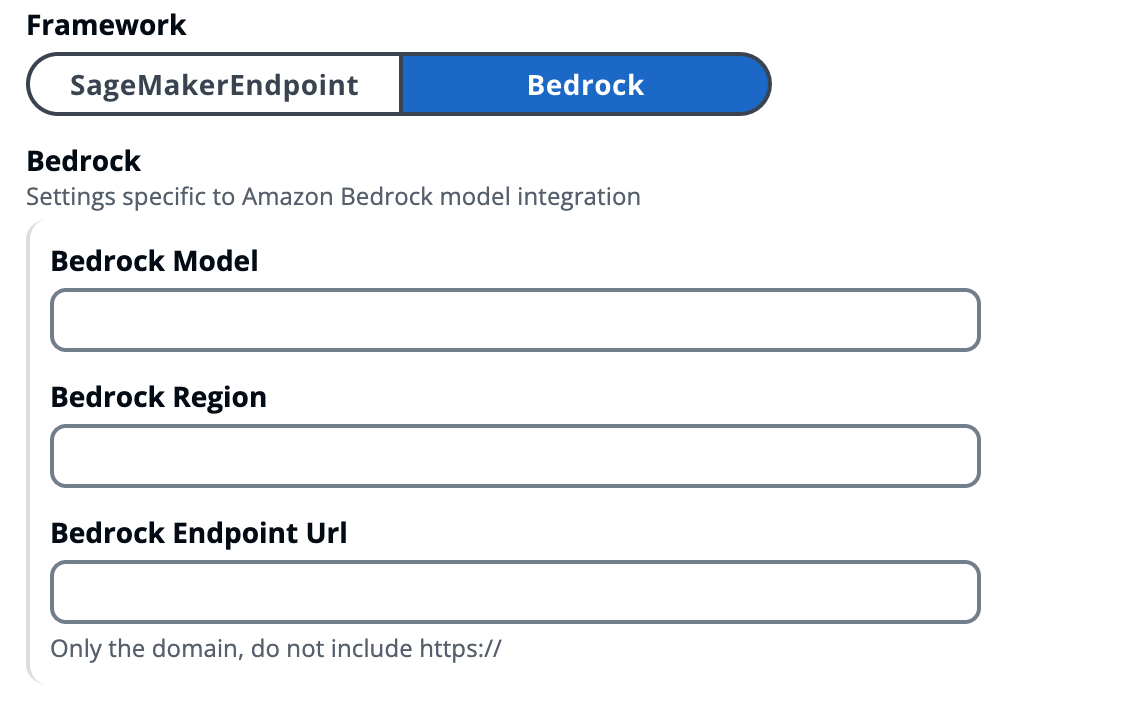

Service Specific Model Settings

The following fields are specific to each model hosting service: