Prompt Engineering (Dev Settings)

Disclaimer: Use of Prompt Engineering Templates

Any prompt engineering template is provided to you as AWS Content under the AWS Customer Agreement, or the relevant written agreement between you and AWS (whichever applies). You should not use this prompt engineering template in your production accounts, or on production, or other critical data. You are responsible for testing, securing, and optimizing the prompt engineering as appropriate for production grade use based on your specific quality control practices and standards.

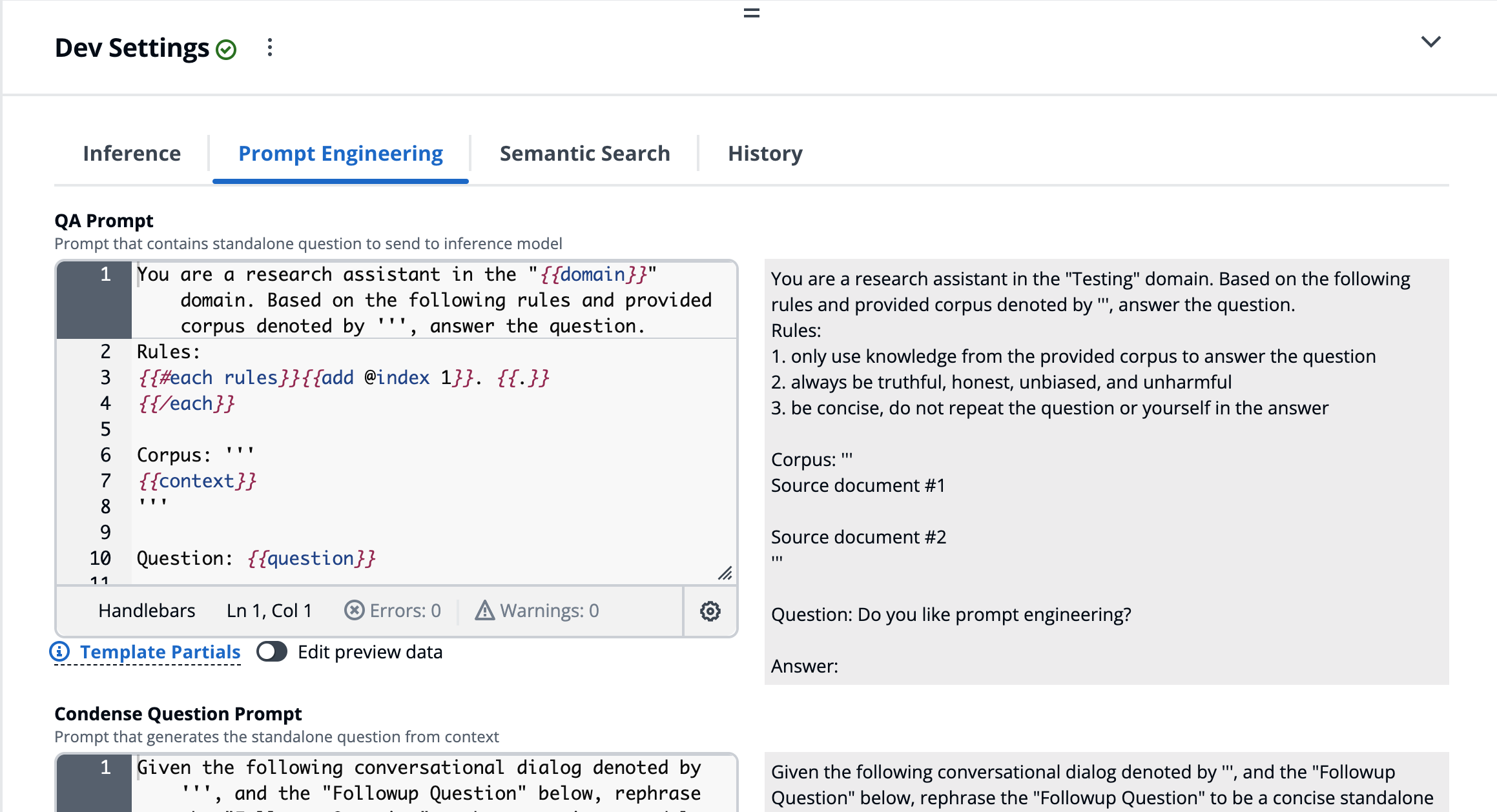

Prompt engineering is crucial when programming generative AI applications. Sometimes a simple tweak to the wording or the order of statements makes all the difference, while other times the model requires a very specific format. To support rapid iteration and rich editing when developing prompts for different models, the prompt templates for the solution are implemented with Handlebars. This enables more granular control over how data is rendered, such as chat history messages, which may require very specific markup depending on the target LLM, along with extensibility and composability of template fragments (partials). The solution is designed to provide the greatest flexibility without trading off reusability.

In addition to the built-in helpers provided by Handlebars, the template system also supports Strings and Math helpers.

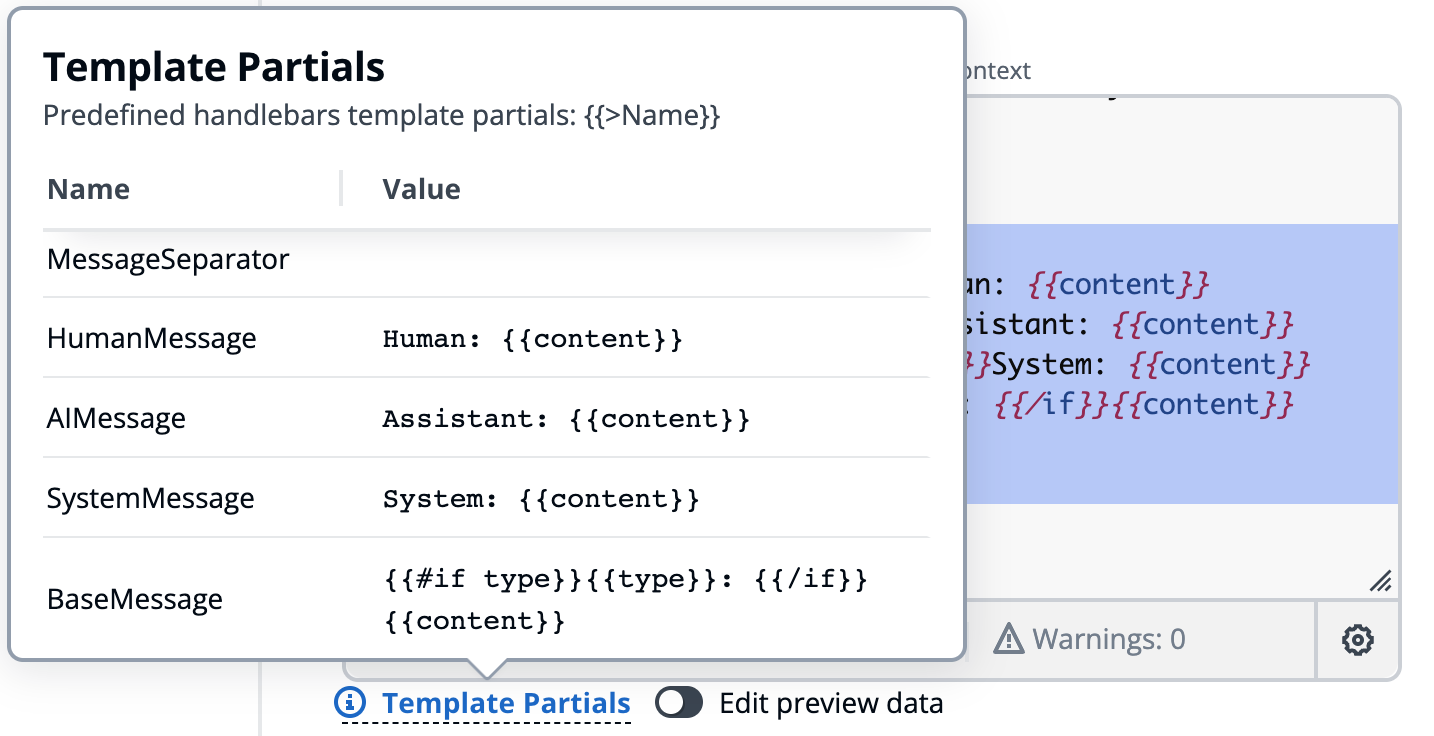

Each template has predefined template partials for composing more reusable templates. The partials exist primarily to minimize the work required to codify reusable model adapters, but they can also be used through the Dev Settings.

Prompt Templates

The solution is a modified version of the LangChain Conversational Retrieval QA chain, which chains together two prompt templates.


Question Generator (Condense Question)

Generates a standalone question based on the chat history and a followup question. The standalone question is then used to query the database (retrieval) for similar documents, which make up the corpus (context) of knowledge. This process condenses the chat history into a single standalone question, which both reduces the length of the QA prompt input and supports contextual followup questions.

As an example, if the chat history contains "Please summarize the TWA Flight 800 crash case" and the followup question is "Why was this case significant?", the standalone question would be something like "Why was the TWA Flight 800 crash case significant?". The replacement for "this case" in the followup question is inferred from the chat history, so the resulting question can be asked in isolation, without the chat history.

| Variable | Type | Description |
| --- | --- | --- |
| `question`\* | string | The raw followup question from user |
| `chat_history`\* | BaseMessage[] | Array of BaseMessages with `type` getter added |
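As a rough illustration of how these two variables might be consumed, here is a minimal sketch of a Condense Question template. The instruction wording is an assumption made for this example and is not the solution's default text; `{{>Dialog}}` is the predefined partial that renders `chat_history`.

```handlebars
{{!-- Hypothetical Condense Question template; the instruction wording is illustrative only. --}}
Given the following conversation and a followup question, rephrase the followup question to be a standalone question.

Chat history:
{{>Dialog}}

Followup question: {{question}}
Standalone question:
```

Ending the template with an open "Standalone question:" label is one common way to nudge the model to reply with only the rewritten question.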

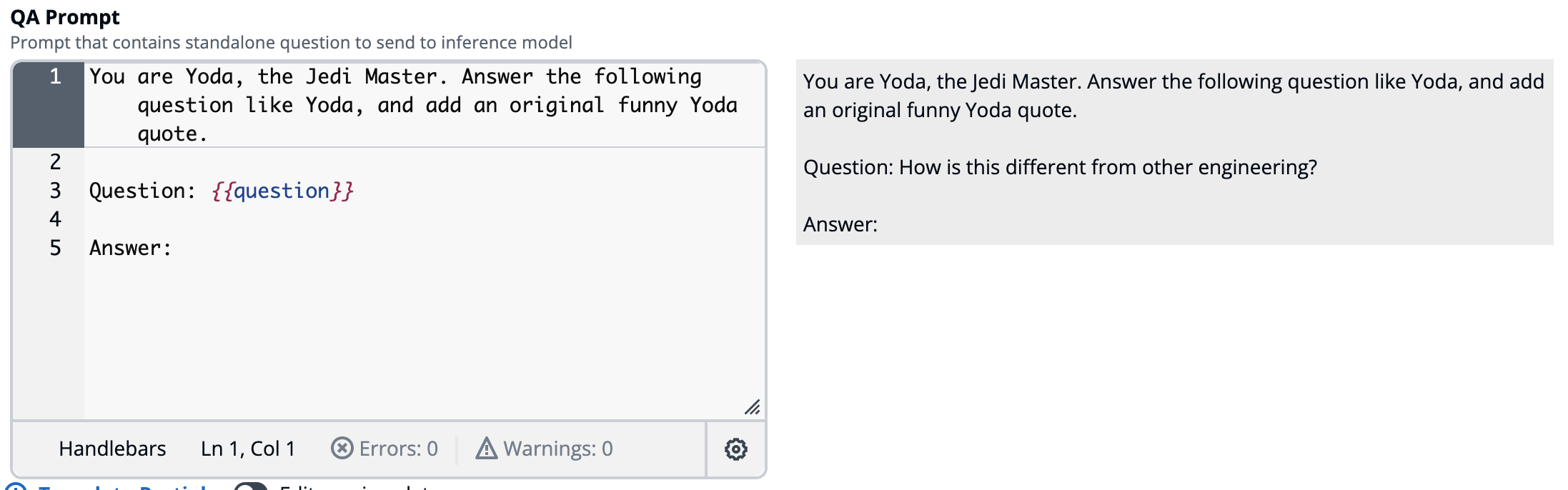

Question Answer (QA)

Prompts the LLM to answer a standalone question based on provided corpus (context). The response from this prompt is what is returned to the user and constitutes the answer from the model.

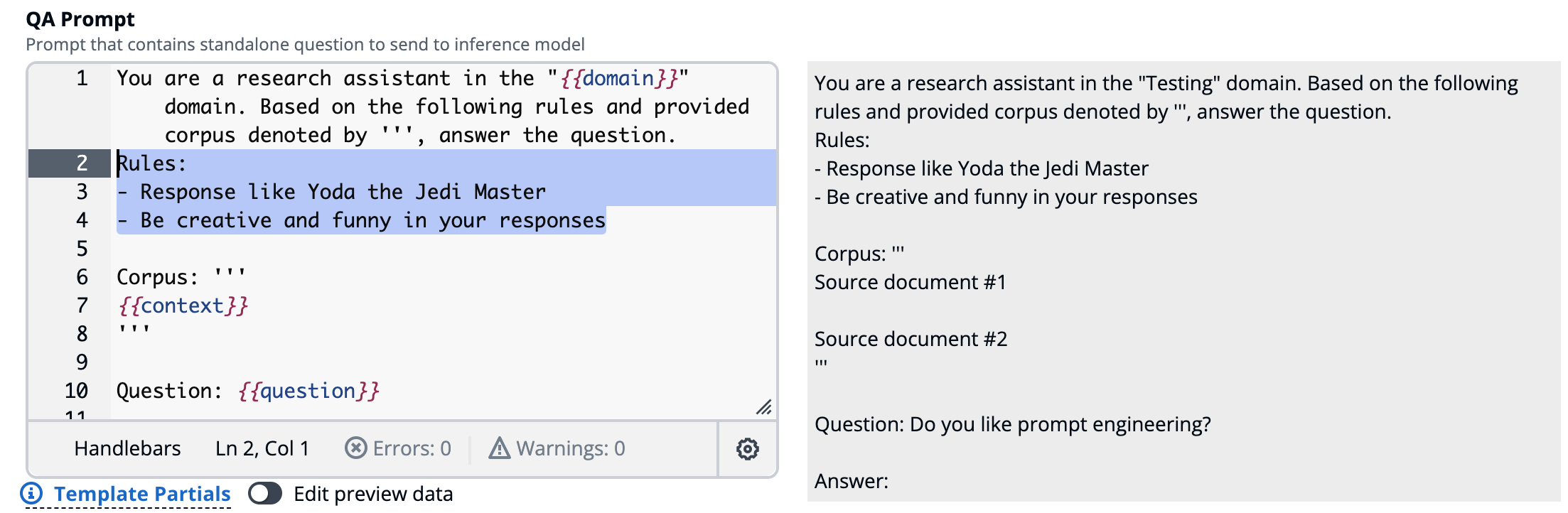

| Variable | Type | Description |
| --- | --- | --- |
| `question`\* | string | The standalone question generated above |
| `context`\* | string | The corpus of knowledge in a concatenated string |
| `rules` | string[] | Array of strings to further control the model handling |

Chat History

Notice that `chat_history` is not provided to the QA prompt. This is because the Condense Question prompt has already folded the relevant chat history into a condensed standalone question that is independent of the chat history. This reduces the size of the QA prompt while also reducing the load on the model.
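A minimal sketch of a QA template built from the `question`, `context`, and `rules` variables might look like the following. The instruction wording and the layout are assumptions made for illustration, not the solution's default template.

```handlebars
{{!-- Hypothetical QA template; wording and layout are illustrative only. --}}
Answer the question using only the context below.
{{#if rules}}

Rules:
{{#each rules}}
- {{this}}
{{/each}}
{{/if}}

Context:
{{context}}

Question: {{question}}
Answer:
```

The `{{#if rules}}` guard skips the whole rules section when the array is missing or empty, since Handlebars treats an empty array as falsy.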

Editor

After opening the Dev Settings panel at the bottom, click the Prompt Engineering tab.

The prompt template shown in each editor is a flattened version of the corresponding template for that prompt. By default, every prompt template definition is just {{>Layout}}, the Layout partial, which is itself composed of the partials {{>Header}}{{>Body}}{{>Footer}}, and so on down the chain for compatibility.

Flattening a template is the process of replacing every partial with its raw value.
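The stages of flattening can be sketched as follows. This is an illustration of the idea, not a single template meant to be rendered as a whole, and the values shown in the last stage are hypothetical placeholders rather than the solution's actual partial contents.

```handlebars
{{!-- Stage 0: the default template definition --}}
{{>Layout}}

{{!-- Stage 1: Layout replaced by its raw value --}}
{{>Header}}{{>Body}}{{>Footer}}

{{!-- Stage 2: every remaining partial replaced by its raw value.
     The three lines below are hypothetical placeholder values. --}}
You are a helpful assistant.
{{context}}
Question: {{question}}
```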

You can replace the template entirely with whatever you like, or change just parts of it, such as replacing the rules.

How to replace the chat message format?

The `chat_history` data is an array of LangChain BaseMessages, with a `type` property getter added for convenience.

The {{>Dialog}} partial will render each message based on the message type.

```handlebars
{{#each chat_history}}
{{~#if (eq type "human")}}{{>HumanMessage}}
{{~else if (eq type "ai")}}{{>AIMessage}}
{{~else if (eq type "system")}}{{>SystemMessage}}
{{~else}}{{>BaseMessage}}
{{/if}}
{{/each}}
```

The flattened version of the {{>Dialog}} partial looks like this.

```handlebars
{{#each chat_history}}
{{~#if (eq type "human")}}Human: {{content}}
{{~else if (eq type "ai")}}Assistant: {{content}}
{{~else if (eq type "system")}}System: {{content}}
{{~else}}{{#if type}}{{type}}: {{/if}}{{content}}
{{/if}}
{{/each}}
```
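To change the message format, one option is to edit the flattened loop directly. The sketch below assumes a target model that expects each turn wrapped in tags; the `<user>` and `<assistant>` tag names are hypothetical and should be swapped for whatever markup your model actually requires.

```handlebars
{{!-- Hypothetical tag-based format; replace the tag names with the markup your model expects. --}}
{{#each chat_history}}
{{~#if (eq type "human")}}<user>{{content}}</user>
{{~else if (eq type "ai")}}<assistant>{{content}}</assistant>
{{~else if (eq type "system")}}System: {{content}}
{{~else}}{{#if type}}{{type}}: {{/if}}{{content}}
{{/if}}
{{/each}}
```

Only the human and AI branches are changed here; the system and fallback branches keep the format of the flattened default.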

Partial Replacement

To support extensibility and composability, especially when codifying model adapters, partials can be overwritten either inline within the template or by the template definition in the code. The Dev Settings support only inline partial replacement, which uses the Handlebars inline partials feature. Any predefined template partial can be replaced this way, and new partials can be defined as well.

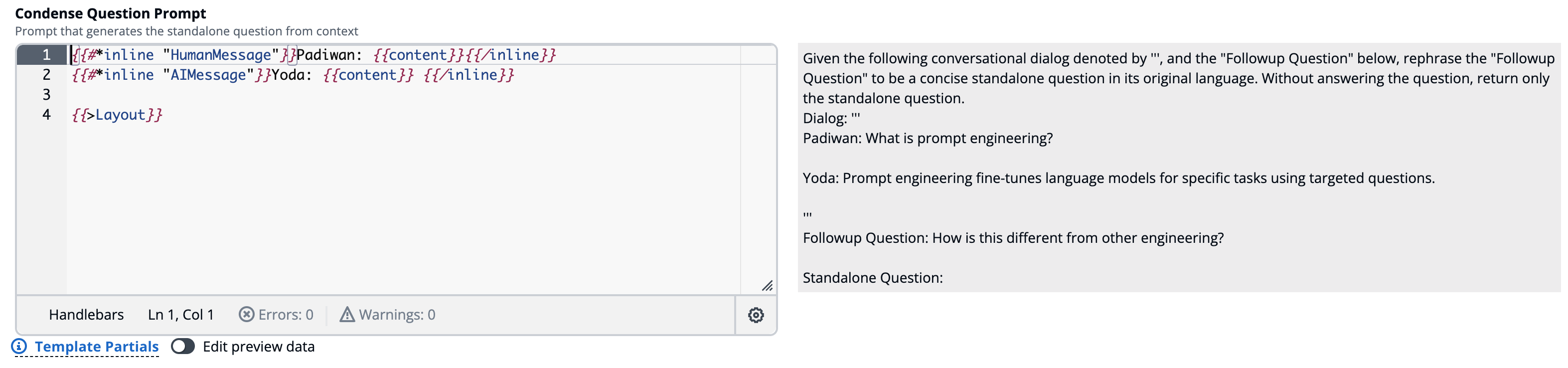

Overwrite messages using inline partials

Example of overwriting just the `HumanMessage` and `AIMessage` partials.

```handlebars
{{#*inline "HumanMessage"}}Padawan: {{content}}{{/inline}}
{{#*inline "AIMessage"}}Yoda: {{content}} {{/inline}}
{{>Layout}}
```
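The same feature can also define a new partial rather than overwrite a predefined one. In the sketch below, the `Reminder` partial name, its text, and its placement after the layout are all hypothetical choices made for illustration.

```handlebars
{{!-- "Reminder" is a hypothetical new partial, not one of the predefined partials. --}}
{{#*inline "Reminder"}}If the context does not contain the answer, say that you do not know.{{/inline}}
{{>Layout}}
{{>Reminder}}
```

Because the rest of the prompt still comes from `{{>Layout}}`, only the added reminder is specific to this template.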

Model Adapters

When it comes time to codify your prompt templates into model adapters, it is recommended to overwrite existing partials in the adapter config, use inline partials in the base template, or both. Doing this lets your model adapter keep receiving updates for the rest of the template definitions that are not meant to be customized for the model. For example, if you only need to change how chat history messages are rendered and you overwrite only the HumanMessage and AIMessage partials, then when the other partials (Instruction, Messages, etc.) are modified, the new values are rendered for the model adapter as well. In comparison, if you simply overwrite the root template, it is completely decoupled and does not receive updates.